Tracing PromptFlow applications in LangSmith

Introduction

This tutorial shows how to correctly trace a PromptFlow application in LangSmith. It covers initial setup, creating nested traces between nodes, and integrating LangGraph within a PromptFlow tool.

Prerequisites

Before getting started, make sure you have:

- PromptFlow installed and configured

- A LangSmith API key set in your environment (`LANGSMITH_API_KEY`), with tracing enabled (`LANGSMITH_TRACING=true`)

- Basic understanding of PromptFlow's DAG structure
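Before running the flow, it can help to confirm that the tracing environment is in place. The sketch below assumes the current LangSmith environment variable names, `LANGSMITH_API_KEY` and `LANGSMITH_TRACING` (older setups may use the legacy `LANGCHAIN_*` names instead). The helper `missing_langsmith_env` is hypothetical and is not part of LangSmith or PromptFlow.

```python
import os
from typing import List, Mapping


def missing_langsmith_env(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the LangSmith settings that are missing or disabled.

    Hypothetical helper: it only inspects environment variables and
    never contacts LangSmith.
    """
    problems = []
    # An API key must be present and non-empty
    if not env.get("LANGSMITH_API_KEY"):
        problems.append("LANGSMITH_API_KEY")
    # Tracing must be switched on explicitly
    if env.get("LANGSMITH_TRACING", "").lower() != "true":
        problems.append("LANGSMITH_TRACING")
    return problems


if __name__ == "__main__":
    missing = missing_langsmith_env()
    if missing:
        print("Set these before running the flow:", ", ".join(missing))
    else:
        print("LangSmith tracing environment looks ready.")
```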

Setting up LangSmith tracing in PromptFlow

Basic node setup

When creating a PromptFlow application, each node can be recorded as a separate trace in LangSmith. To do this, stack LangSmith's `@traceable` decorator on top of PromptFlow's `@tool` decorator.

Here's the basic structure for a node:

```python
from promptflow.core import tool
import langsmith as ls


@ls.traceable(run_type="chain")
@tool
def my_tool_function(input_parameter: str) -> str:
    # Your logic here
    processed = f"Processed: {input_parameter}"
    return processed
```
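Stacking decorators this way works because a well-behaved decorator copies the wrapped function's metadata with `functools.wraps`, so `inspect.signature` still reports the original parameters. The sketch below uses a hypothetical stand-in decorator, `fake_traceable`, instead of LangSmith itself, and assumes that PromptFlow binds node inputs by inspecting the function signature.

```python
import functools
import inspect


def fake_traceable(func):
    """Stand-in for a tracing decorator (not LangSmith's implementation)."""
    @functools.wraps(func)  # copies __name__/__doc__ and sets __wrapped__
    def wrapper(*args, **kwargs):
        # A real tracer would open a run here and close it after the call
        return func(*args, **kwargs)
    return wrapper


@fake_traceable
def my_tool_function(input_parameter: str) -> str:
    processed = f"Processed: {input_parameter}"
    return processed


# inspect.signature follows __wrapped__, so the original parameters are visible
sig = inspect.signature(my_tool_function)
print(list(sig.parameters))    # ['input_parameter']
print(my_tool_function("hi"))  # Processed: hi
```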

Creating nested traces

To create nested traces (where one trace is a child of another), you need to:

- Get the current run tree headers in the parent node

- Pass those headers to the child node via the `langsmith_extra` parameter

- Let the child node's `@traceable` decorator use those headers to attach its run to the parent
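The last step only works if the dictionary that reaches the child actually carries a trace context. The sketch below assumes that `RunTree.to_headers()` stores the trace position under a `langsmith-trace` key (alongside an optional `baggage` entry); verify this against your installed `langsmith` version. The helper `has_trace_context` and the placeholder header values are hypothetical.

```python
from typing import Optional

# Assumed header key written by RunTree.to_headers()
TRACE_HEADER = "langsmith-trace"


def has_trace_context(langsmith_extra: Optional[dict]) -> bool:
    """Return True if langsmith_extra carries headers a child run can attach to."""
    if not langsmith_extra:
        return False
    parent = langsmith_extra.get("parent")
    # Only the header-dict form is handled here, not RunTree objects
    return isinstance(parent, dict) and TRACE_HEADER in parent


# Placeholder values standing in for run_tree.to_headers()
payload = {"parent": {TRACE_HEADER: "<dotted-order>", "baggage": "<baggage>"}}
print(has_trace_context(payload))         # True
print(has_trace_context({"parent": {}}))  # False
print(has_trace_context(None))            # False
```

A child node could call a check like this to log a warning when it is about to start a new root trace instead of a nested one.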

Parent node example

```python
from promptflow.core import tool
import langsmith as ls
from langsmith.run_helpers import get_current_run_tree


@ls.traceable(run_type="chain")
@tool
def parent_node(input_text: str) -> dict:
    # Your custom logic here
    processed = f"Processed: {input_text}"

    # Get the current run tree headers to pass downstream.
    # get_current_run_tree() returns None when no run is active.
    run_tree = get_current_run_tree()
    headers = run_tree.to_headers() if run_tree else {}

    return {
        "processed": processed,
        "langsmith_extra": {"parent": headers},
    }
```
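The `to_headers() if run_tree else {}` guard can be factored into a helper and exercised without PromptFlow or LangSmith. In this sketch, `build_langsmith_extra` and `FakeRunTree` are hypothetical stand-ins. Unlike the node above, this variant returns an empty `langsmith_extra` when there is no active run instead of passing `{"parent": {}}`; that is a design choice for this sketch, not a LangSmith requirement.

```python
from typing import Any, Dict, Optional


class FakeRunTree:
    """Stand-in for langsmith's RunTree exposing only to_headers()."""

    def __init__(self, headers: Dict[str, str]):
        self._headers = headers

    def to_headers(self) -> Dict[str, str]:
        return dict(self._headers)


def build_langsmith_extra(run_tree: Optional[Any]) -> Dict[str, Any]:
    """Build the langsmith_extra value a parent node returns downstream."""
    if run_tree is None:
        # No active run: send no parent at all
        return {}
    headers = run_tree.to_headers()
    return {"parent": headers} if headers else {}


print(build_langsmith_extra(None))  # {}
print(build_langsmith_extra(FakeRunTree({"langsmith-trace": "<dotted-order>"})))
```

In `parent_node`, the return value would then use `"langsmith_extra": build_langsmith_extra(get_current_run_tree())`.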

Child node example

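```python
from promptflow.core import tool
import langsmith as ls


@ls.traceable(run_type="chain")
@tool
def child_node(processed_text: str, langsmith_extra: dict = None) -> str:
    # langsmith_extra is declared so the node has an input for the value wired
    # from the parent; @traceable intercepts this keyword and uses it to
    # attach this run to the parent run.
    # Your logic here
    enhanced_text = f"Enhanced: {processed_text}"
    return enhanced_text
```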
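Inside `child_node`, your own logic normally never reads `langsmith_extra`: the tracing wrapper accepts it as a keyword argument and uses it to choose the parent run. The toy decorator below, `consume_langsmith_extra`, is a hypothetical imitation of that behavior for illustration only, not LangSmith's code. It records the parent it received and calls the function without forwarding the keyword, so the function sees its default.

```python
import functools
from typing import Any, Callable, List, Optional

seen_parents: List[Optional[dict]] = []  # what the toy tracer received


def consume_langsmith_extra(func: Callable) -> Callable:
    """Toy imitation of a tracer that intercepts langsmith_extra."""
    @functools.wraps(func)
    def wrapper(*args: Any, langsmith_extra: Optional[dict] = None, **kwargs: Any):
        # A real tracer would attach the new run under this parent
        seen_parents.append((langsmith_extra or {}).get("parent"))
        # The keyword is not forwarded, so func sees its default value
        return func(*args, **kwargs)
    return wrapper


@consume_langsmith_extra
def child_node(processed_text: str, langsmith_extra: dict = None) -> str:
    assert langsmith_extra is None  # consumed by the wrapper
    return f"Enhanced: {processed_text}"


result = child_node(
    processed_text="Processed: hi",
    langsmith_extra={"parent": {"langsmith-trace": "<dotted-order>"}},
)
print(result)        # Enhanced: Processed: hi
print(seen_parents)  # [{'langsmith-trace': '<dotted-order>'}]
```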

Flow YAML configuration

Here's how to configure `flow.dag.yaml` to connect these nodes so the trace headers propagate from parent to child:

```yaml
inputs:
  user_input:
    type: string
outputs:
  final_output:
    type: string
    reference: ${child.output}
nodes:
- name: parent
  type: python
  source:
    type: code
    path: parent_node.py
  function: parent_node
  inputs:
    input_text: ${inputs.user_input}
- name: child
  type: python
  source:
    type: code
    path: child_node.py
  function: child_node
  inputs:
    processed_text: ${parent.output.processed}
    langsmith_extra: ${parent.output.langsmith_extra}
```
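For a quick local check of the wiring, the `${parent.output.<key>}` references can be mimicked in plain Python by calling the node bodies in DAG order and passing keys of the parent's output dictionary as keyword arguments. This is a hypothetical harness, not PromptFlow's executor: the undecorated `parent_logic` and `child_logic` functions stand in for the node bodies, and the headers are placeholders.

```python
from typing import Any, Dict


def parent_logic(input_text: str) -> Dict[str, Any]:
    # Same return shape as parent_node, with placeholder headers
    return {
        "processed": f"Processed: {input_text}",
        "langsmith_extra": {"parent": {"langsmith-trace": "<dotted-order>"}},
    }


def child_logic(processed_text: str, langsmith_extra: dict = None) -> str:
    return f"Enhanced: {processed_text}"


def run_flow(user_input: str) -> Dict[str, Any]:
    """Mimic the YAML wiring: inputs -> parent -> child -> outputs."""
    parent_output = parent_logic(input_text=user_input)         # ${inputs.user_input}
    child_output = child_logic(
        processed_text=parent_output["processed"],              # ${parent.output.processed}
        langsmith_extra=parent_output["langsmith_extra"],       # ${parent.output.langsmith_extra}
    )
    return {"final_output": child_output}                       # ${child.output}


print(run_flow("hello"))  # {'final_output': 'Enhanced: Processed: hello'}
```

To run the real flow, PromptFlow's `pf flow test` CLI command executes it locally with tracing active.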

Important considerations

- Return type: Make sure the return type annotation in the function signature matches what the function actually returns (for example, `parent_node` returns a dictionary, so it is annotated `-> dict`).

- Headers propagation: Always check that `run_tree` is not `None` before calling `to_headers()`, because `get_current_run_tree()` returns `None` when no run is active.
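A mismatch between the annotation and the actual return value is easy to catch in a unit test. The sketch below uses `typing.get_type_hints` to compare a function's declared return type with what it returns for a sample input. `returns_declared_type` is a hypothetical helper that only handles plain classes such as `str` and `dict`, not generic aliases like `Dict[str, Any]`.

```python
from typing import Any, Callable, get_type_hints


def returns_declared_type(func: Callable, *args: Any, **kwargs: Any) -> bool:
    """Call func and check the result against its return annotation."""
    expected = get_type_hints(func).get("return")
    if not isinstance(expected, type):
        # No annotation, or a generic alias this simple check does not cover
        raise TypeError(f"{func.__name__} has no plain-class return annotation")
    return isinstance(func(*args, **kwargs), expected)


def parent_ok(input_text: str) -> dict:
    return {"processed": f"Processed: {input_text}"}


def parent_wrong(input_text: str) -> str:  # annotation does not match the value
    return {"processed": f"Processed: {input_text}"}


print(returns_declared_type(parent_ok, "hi"))     # True
print(returns_declared_type(parent_wrong, "hi"))  # False
```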

Using LangGraph with PromptFlow and LangSmith

You can run a LangGraph graph inside a PromptFlow tool. With LangSmith tracing enabled, the graph's runs are traced automatically and appear nested under the tool's trace. Here's an example:

```python
from promptflow.core import tool
import langsmith as ls
from langgraph.prebuilt import create_react_agent
from langchain_openai import ChatOpenAI
from langchain_core.tools import tool as langchain_tool


# Define a tool for the LangGraph agent
@langchain_tool
def get_weather(city: str) -> str:
    """Get weather information for a city."""
    if city == "nyc":
        return "Cloudy in NYC"
    elif city == "sf":
        return "Sunny in SF"
    else:
        return "Unknown city"


# Create the agent once at import time so every node call reuses it
model = ChatOpenAI(model="gpt-4o-mini")
tools = [get_weather]
agent = create_react_agent(model, tools=tools)


@ls.traceable(run_type="chain")
@tool
def graph_node(input_text: str, langsmith_extra: dict = None) -> str:
    # Invoke the LangGraph agent; its model and tool calls are traced
    # as children of this node's run
    response = agent.invoke({
        "messages": [{"role": "user", "content": input_text}]
    })
    # The agent returns its full state; the last message holds the final answer
    return f"Agent response: {response['messages'][-1].content}"
```
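The agent returns its whole state, so the node's output depends on how the final message is read. LangChain message `content` can be either a plain string or a list of content blocks; the hypothetical helper `final_text` below handles both shapes. It is exercised with `SimpleNamespace` stand-ins instead of real message objects, so it runs without LangChain installed.

```python
from types import SimpleNamespace
from typing import Any, Dict


def final_text(state: Dict[str, Any]) -> str:
    """Return the text of the last message in a LangGraph-style state dict."""
    messages = state.get("messages") or []
    if not messages:
        return ""
    content = messages[-1].content
    if isinstance(content, str):
        return content
    # List form: keep plain strings and blocks shaped like {"type": "text", "text": ...}
    parts = []
    for block in content:
        if isinstance(block, str):
            parts.append(block)
        elif isinstance(block, dict) and block.get("type") == "text":
            parts.append(block.get("text", ""))
    return "".join(parts)


state = {"messages": [
    SimpleNamespace(content="What's the weather in SF?"),
    SimpleNamespace(content=[{"type": "text", "text": "Sunny in SF"}]),
]}
print(final_text(state))  # Sunny in SF
```

In `graph_node`, the return line could then be `return f"Agent response: {final_text(response)}"`.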

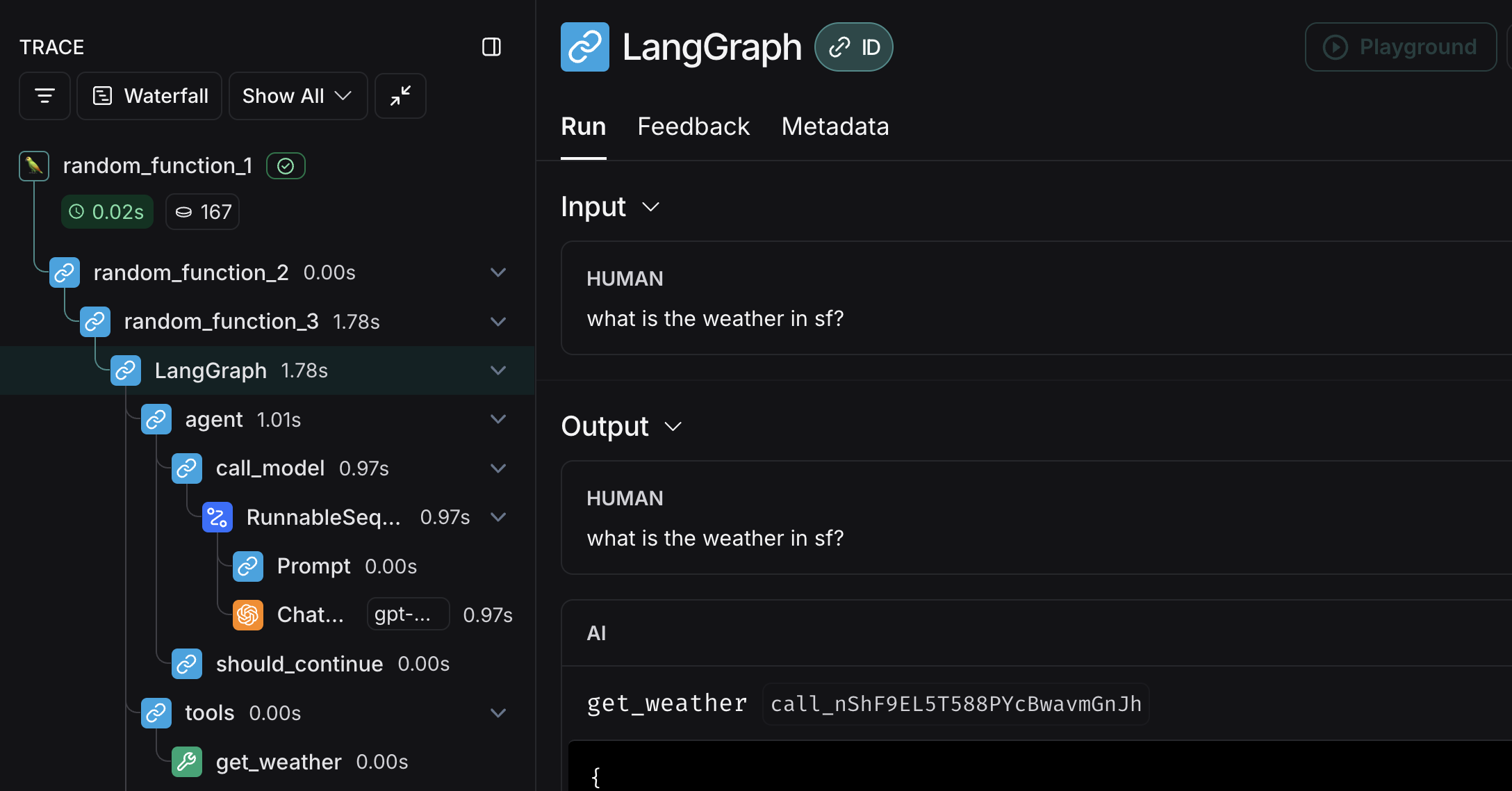

This will produce the following trace tree in LangSmith:

For more on nested traces, see the LangSmith documentation on tracing.